F# and Gradient Descent: How to find the minimum of a function in a few minutes?

Gradient – a pinch of theory

Gradient is a mathematical concept from the field of functional analysis, representing the vector of partial derivatives of a function of several variables. In a simplified form, the gradient is a vector indicating the direction of the fastest increase of a function. It can be used to find extrema of a function – for example, its minimum. To do this, one needs to calculate the gradient of the function at the point of interest and then move in the direction opposite to the gradient until we reach the point where the gradient is zero – this means that we have reached the minimum.

Gradient is also an important concept in machine learning. It allows models to adapt to input data and minimize error. In practice, the gradient can be used for various purposes, such as updating weights in neural networks, optimizing cost functions, or regularizing models. Thanks to the gradient, machine learning algorithms can predict output for new data and provide accurate results. However, it is important to note that calculating the gradient can be time-consuming, especially for large datasets.

To better understand how the gradient works, I will present a simple example of computing the minimum of a function.

F#

To better visualize this concept, it is worth using a tool that seems to work great in the environment of numerical methods, functions, and optimization algorithms – the F# language.

F# is a so-called “functional first programming language” which, although it allows creating code consistent with, for example, the object-oriented paradigm, reveals its true “power” when we stick to the functional approach. It is a strongly typed language that provides interoperability – we can use the rich ecosystem of .NET libraries and tools as well as a mature model of asynchronicity.

Implementation

We will use F# Interactive, an environment that allows executing F# code in interactive mode. Let’s start by defining dependencies.

#r "nuget: Plotly.NET"

open System

open Plotly.NETFor the purposes of this example, let’s define a function whose minimum we will be looking for:

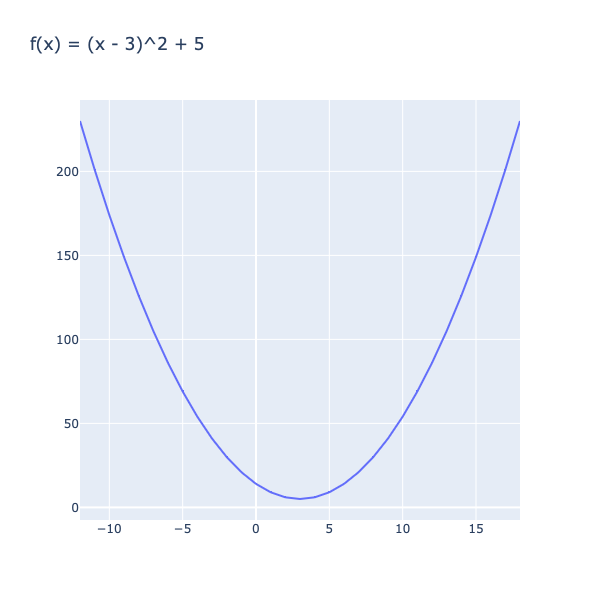

// f(x) = (x - 3)^2 + 5

let f x = (x - 3.) ** 2. + 5.For clarity, let’s visualize it (using the Plotly.NET library):

[ -12 .. 18 ]

|> List.map (fun x -> (float x, f (float x)))

|> Chart.Line

|> Chart.withTitle "f(x) = (x - 3)^2 + 5"

|> Chart.show

We will use the gradient method to find x for which our function reaches its minimum.

The first step will be to determine the derivative of the function under investigation:

// f'(x) = 2(x - 3)

let dx_f x = 2. * (x - 3.)Here’s how the algorithm will work to help us locate such a minimum:

- Start by generating a pseudo-random value X

- Determine the so-called learning rate (alpha)

- Set the number of iterations

- Calculate the gradient of the function for X – i.e., the value of its derivative at this point

- Move our X in the negative direction of the gradient – i.e., in the direction of function descent. This will come down to subtracting from X the gradient multiplied by the learning rate

- Steps 4 and 5 will be repeated until the assumed number of iterations is reached

// 1

// initial guess

let rnd = Random(532123)

let x = rnd.NextDouble() * 100.

// 2

// learning rate

let alfa = 0.001

// 3

// iterations

let i = 10_000

// 4 - 5

// performs a single step of gradient descent by calculating the current value of x

let gradientStep alfa x =

let dx = dx_f x

// show the current values of x and the gradient dx_f(x)

printfn $"x = %.20f{x}, dx = %.20f{dx}"

x - alfa * dx

// 6

// uses gradientStep to find the minimum of f(x) = (x - 3)^2 + 5

let findMinimum (alfa: float) (i: int) (x: float) =

let rec search x i =

if i = 0 then x else search (gradientStep alfa x) (i - 1)

search x iThe only thing left is to call the implemented function with the previously defined parameters:

findMinimum alfa i x |> printfn "'f(x) = (x-3)^2 + 5' reaches a minimum at 'x' = %.20f"to obtain a result similar to:

'f(x) = (x-3)^2 + 5' reaches a minimum at 'x' = 3.00000004479085946585Of course, for such simple functions, it is easier to find the minimum using the zero point of the derivative. However, in more complex problems, such as linear regression where we will minimize the error function, the gradient will work great.

An example of such regression coming soon…

Useful links

- F# and linear regression

- https://fsharp.org

- https://fsharpforfunandprofit.com

- https://learn.microsoft.com/pl-pl/dotnet/

- https://ocw.mit.edu/courses/18-01sc-single-variable-calculus-fall-2010/

Complete code

[gist id=”d1eaf03f5fa6585dfc2ef6d7e4fedd61″]